Are the analytical results you are reporting valid? Maybe yesterday's or last week's were, but will today's be? Will the results from your tray of 70 samples have to be thrown out and the analyses run again? This month we talk about avoiding problems through routine maintenance, and about verifying that your instrument is working by running a performance verification method.

Everything in the sample path of a GC has a finite lifetime and is subject to failure. Each part works as expected (as it did when the method was validated, calibrated, or last set up) only for a while, and sometimes it seems to fail at random. The danger is that once performance changes, the analytical results can be flawed: false positives, false negatives, inaccurate quantification, or any number of other problems can appear.

Do you know when your system needs maintenance? Do you wait until you get obviously wrong results? Do you perform maintenance on some regular basis (based on number of samples run, number of days or months, etc.) before a problem arises? Do you know the system is working as expected in the first place?

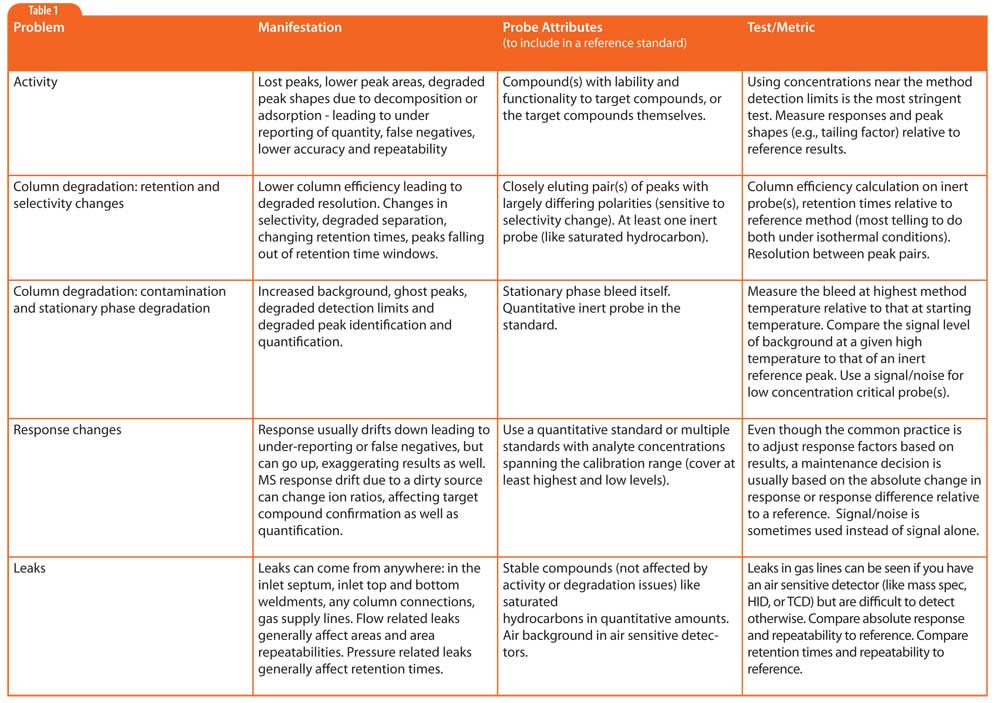

Table 1: A partial list of problems that a proper performance verification standard and method should be able to detect.

I have spoken to people who never realized their instrument had stopped working, because their sample chromatograms simply showed no “impurity peak”. In some of these cases, the detector was off. In others, fittings on the inlet or detector were loose, or the inlet was operating in split mode instead of splitless. In every case, the product was passing specifications because the quality test was based on a negative result (on-spec product had no or only a minimal impurity peak).

Another story I heard was of a product failing purity testing because of ever-increasing ghost peaks from degraded stationary phase.

Of course, none of these problems would happen to you. You are careful. But however careful you are, do you know how long your liner deactivation lasts before inlet activity increases and you start to lose recovery of labile compounds? Do you know when your detector jet is getting dirty, changing the flame chemistry and reducing dynamic range (and introducing error into your area percent report)? Do you know when a loose ferrule is letting air into your MS source and changing ion ratios and/or response factors?

More subtle changes affecting results can include:

- random losses from a failing septum (one injection it seals, the next it leaks, venting some sample)

- a leaking ferrule at the inlet base that changes its sealing characteristics every time the GC oven temperature cycles

- debris in the inlet that selectively changes recovery of certain types of compounds over others

- graphite extruding into the inlet from an overtightened nut, selectively changing solute recoveries

So how do you know whether your system is functioning as it should? The easiest way is to run, on a regular basis, a reference standard designed to interrogate the parts of the system that can affect the analytical results. Such a standard should let you check response, activity, peak shape, efficiency, selectivity, and anything else that is important to that specific method.
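The article does not prescribe particular formulas, but one common way to put numbers on peak shape, efficiency, and selectivity from a reference run is the USP tailing factor, the half-height plate count, and half-height resolution. Below is a minimal Python sketch of those three calculations; the function names and input values are hypothetical, and in practice these quantities usually come straight from the data system's system suitability report.

```python
def plate_count_half_height(t_r: float, w_half: float) -> float:
    """Plate number N = 5.54 * (t_R / w_0.5)^2.

    t_r and w_half (peak width at half height) must use the same time units.
    """
    return 5.54 * (t_r / w_half) ** 2


def tailing_factor(w_005: float, f: float) -> float:
    """USP tailing factor T = W_0.05 / (2 f).

    w_005: full peak width at 5% of peak height.
    f: distance from the peak front to the apex, measured at 5% height.
    T = 1 for a symmetric peak; T > 1 indicates tailing.
    """
    return w_005 / (2.0 * f)


def resolution_half_height(t1: float, t2: float,
                           w1_half: float, w2_half: float) -> float:
    """Resolution Rs = 1.18 * (t2 - t1) / (w1_0.5 + w2_0.5) for adjacent peaks."""
    return 1.18 * (t2 - t1) / (w1_half + w2_half)


# Hypothetical values read from a reference-standard chromatogram (minutes).
print(round(plate_count_half_height(10.0, 0.05)))                 # efficiency
print(round(tailing_factor(0.12, 0.05), 2))                       # peak shape
print(round(resolution_half_height(5.00, 5.10, 0.02, 0.02), 2))   # selectivity
```

Tracking these numbers run after run, rather than only eyeballing the chromatogram, is what makes gradual changes (a slowly tailing active compound, a slowly broadening peak) visible before they affect sample results.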

This is common practice in environmental analysis, where approved methodology frequently requires running a series of standards to establish system functionality before running samples (or before reporting their results). One almost always must also run blanks to check for carryover, and “check standards” every X samples to verify that the system continues to work throughout the whole sequence. Not all methods require this level of attention, but methods whose results must be legally defensible (e.g., forensic and environmental analyses), that have an impact on human health (e.g., pharmaceuticals, food safety, plant safety), or that relate to the assessment of high-value products certainly should have method quality metrics and a requirement to verify performance in the same time frame in which sample results are reported.
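The bracketing scheme described above can be sketched as a small sequence builder. This is a hypothetical illustration, not the layout of any specific regulatory method: it assumes one blank plus one check standard before the first sample, after every `check_every` samples, and after the last sample. Real methods define their own order and frequency.

```python
from typing import List


def build_sequence(samples: List[str], check_every: int,
                   blank: str = "BLANK",
                   check_std: str = "CHECK_STD") -> List[str]:
    """Bracket samples with blanks and check standards.

    Hypothetical layout: blank + check standard before the first sample
    (establish system function), after every `check_every` samples
    (carryover and continued performance), and after the last sample.
    """
    if check_every < 1:
        raise ValueError("check_every must be at least 1")
    sequence = [blank, check_std]            # opening verification
    for i, sample in enumerate(samples, start=1):
        sequence.append(sample)
        if i % check_every == 0:
            sequence += [blank, check_std]   # periodic bracket
    if samples and len(samples) % check_every != 0:
        sequence += [blank, check_std]       # close the final partial bracket
    return sequence


tray = [f"S{n:02d}" for n in range(1, 71)]   # a tray of 70 samples
seq = build_sequence(tray, check_every=10)
print(len(seq), seq[:4], seq[-3:])
```

Because every group of samples is followed by a check standard, a failed check only puts the samples since the last passing check in question, not the whole tray.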

Table 1 is a partial list of problems that a proper performance verification standard and method should be able to detect. The reference method should allow evaluation not only of absolute values (areas, peak heights, peak tailing, retention times, S/N, etc.) but also of the repeatability of those measurements (run-to-run variability on the same instrument), because a decrease in repeatability is itself often an indicator of system problems. Many laboratories use one or more of the method’s calibration standards for performance assessment, but for a system used for multiple methods, an independent performance standard and method can sometimes be more useful.
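Repeatability is commonly expressed as the percent relative standard deviation (%RSD) of replicate injections. A minimal sketch, assuming the sample standard deviation and a method-specific limit `max_rsd` (the function names, peak areas, and limits used here are hypothetical):

```python
import statistics
from typing import Sequence


def percent_rsd(values: Sequence[float]) -> float:
    """Relative standard deviation (%) of replicate measurements (sample stdev)."""
    if len(values) < 2:
        raise ValueError("need at least two replicate injections")
    return 100.0 * statistics.stdev(values) / statistics.mean(values)


def repeatability_ok(values: Sequence[float], max_rsd: float) -> bool:
    """True if run-to-run %RSD is within the method's limit."""
    return percent_rsd(values) <= max_rsd


# Hypothetical peak areas from replicate injections of the reference standard.
areas = [100.0, 102.0, 98.0]
print(round(percent_rsd(areas), 2), repeatability_ok(areas, max_rsd=2.5))
```

The same calculation applies to retention times, peak heights, or response ratios; a rising %RSD can flag an intermittent leak such as a failing septum even while the average values still look acceptable.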

One should set an expectation for the allowed variation in each metric, beyond which maintenance or correction should be done. For example, if the absolute retention time of peak X drifts by more than 0.30 min, column maintenance should be done. Or, if the response factor of labile compound Y at the low calibration level is less than 80% of its response factor in the high standard, the inlet liner should be replaced and the column head trimmed. Metrics and limits are method specific and are usually best developed during the initial method development and validation study.
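The two example limits above can be encoded as a simple check on each reference run. This sketch uses the article's example thresholds (0.30 min retention-time drift, 80% low-to-high response-factor ratio) as defaults; the class, function names, and data values are hypothetical, and real limits should come from your own validation study.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReferenceRun:
    rt_peak_x: float   # observed absolute retention time of peak X (min)
    rf_low_y: float    # response factor of labile compound Y, low calibration level
    rf_high_y: float   # response factor of compound Y, high standard


def required_actions(run: ReferenceRun, rt_expected: float,
                     max_rt_drift: float = 0.30,
                     min_rf_ratio: float = 0.80) -> List[str]:
    """Compare one reference run against the example limits from the text."""
    actions = []
    if abs(run.rt_peak_x - rt_expected) > max_rt_drift:
        actions.append("column maintenance")
    if run.rf_low_y / run.rf_high_y < min_rf_ratio:
        actions.append("replace inlet liner and trim column head")
    return actions


print(required_actions(ReferenceRun(12.35, 0.70, 1.00), rt_expected=12.00))
print(required_actions(ReferenceRun(12.10, 0.95, 1.00), rt_expected=12.00))
```

An empty list means the run is within limits; anything else names the corrective action to take before samples are run or reported.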

Some laboratories, in addition to performance verification methods, have more proactive practices that fall into the bucket of preventative maintenance. Every 10 tanks of He, they change the hydrocarbon and oxygen traps. Every 250 injections, they change the septum. Every 2500 injections, they replace the syringe. Every day before running a tray of samples, they replace the liner and trim the column. These practices are designed to avoid problems before they can affect the analytical results. However, you still need to run a performance check to verify that the routine maintenance was done correctly (no loose septum, no liner installed upside down, column inserted to the correct position, etc.).
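Count-based preventative maintenance like this is easy to track with usage counters. A minimal sketch, assuming hypothetical counters kept per item since its last replacement and using the intervals from the example above; time-based tasks such as the daily liner change and column trim are not modeled here.

```python
from typing import Dict, List, Tuple

# item -> (usage unit, replacement interval); intervals copied from the example
# practices in the text, not recommendations for any particular instrument.
PM_SCHEDULE: Dict[str, Tuple[str, int]] = {
    "hydrocarbon and oxygen traps": ("helium tanks", 10),
    "septum": ("injections", 250),
    "syringe": ("injections", 2500),
}


def due_items(usage_since_replaced: Dict[str, int]) -> List[str]:
    """Items whose usage since their last replacement has reached the interval."""
    return [item for item, (_unit, interval) in PM_SCHEDULE.items()
            if usage_since_replaced.get(item, 0) >= interval]


# Hypothetical counter values since each item was last replaced.
usage = {"hydrocarbon and oxygen traps": 4, "septum": 260, "syringe": 1800}
for item in due_items(usage):
    unit, interval = PM_SCHEDULE[item]
    print(f"Replace {item} (interval: {interval} {unit}), then run the performance check")
```

Whatever the counters say, the performance verification run is still what confirms that the maintenance itself was done correctly.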

The bottom line is that if you are running a method that was designed and validated to give an accurate result, it is your responsibility to know whether your system is running as expected before you report that result. Instituting and following reasonable practices of routine preventative maintenance and performance verification testing are critical parts of ensuring that your results are valid.

This blog article series is produced in collaboration with Dr Matthew S. Klee, internationally recognized for contributions to the theory and practice of gas chromatography. His experience in chemical, pharmaceutical and instrument companies spans over 30 years. During this time, Dr Klee’s work has focused on elucidation and practical demonstration of the many processes involved with GC analysis, with the ultimate goal of improving the ease of use of GC systems, ruggedness of methods and overall quality of results. If you have any questions about this article, send them to techtips@sepscience.com