In the last instalment (HPLC Solutions #112), we looked at one of the two most common ways to filter the detector signal to reduce baseline noise: the detector time constant. The second technique is to adjust the data rate of the data system, that is, the number of data points the data system gathers each second. Because today’s UHPLC systems generate very narrow peaks, and because data systems must also accommodate peaks from capillary GC separations, many data systems will collect data at 100 Hz or faster.

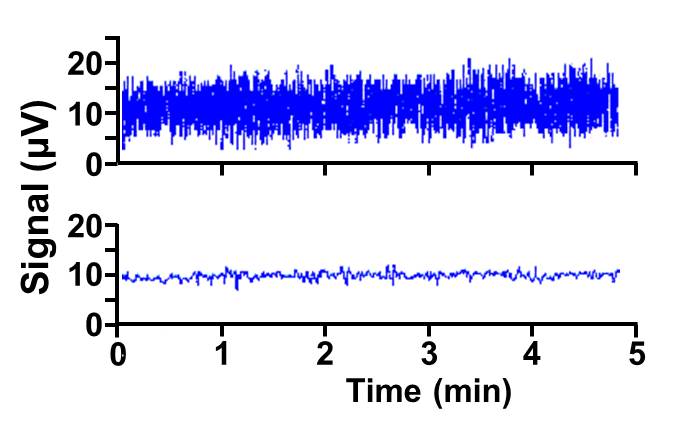

If the data rate is set too high, the signal is not enhanced, but the noise is, resulting in noisy baselines. An example is seen in the top trace of Figure 1, where high-frequency noise unnecessarily broadens the baseline at a data rate of 15 Hz. Slowing the data rate to 1 Hz gives the lower plot of Figure 1. In theory, the noise should change with the square root of the sampling rate, so a 15-fold reduction in data rate should reduce the noise by √15, or about 4-fold, which is what is seen. A further advantage of reducing the data collection rate is that less data storage space is required. In today’s world of multi-terabyte hard disks, we don’t think much about storage space, but with high-speed data acquisition, the amount of space required is not trivial.

Figure 1
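The square-root relationship can be checked with a short simulation. This is only a sketch under stated assumptions: the baseline noise is modelled as uncorrelated (white) Gaussian noise, and the slower data rate is modelled as averaging consecutive groups of 15 Hz points into 1 Hz points. Real data systems may filter differently, and the function names are hypothetical.

```python
import math
import random
import statistics


def expected_noise_reduction(fast_hz: float, slow_hz: float) -> float:
    """Theoretical noise reduction for white noise: sqrt of the rate ratio."""
    return math.sqrt(fast_hz / slow_hz)


def bunch(samples: list[float], n: int) -> list[float]:
    """Average consecutive groups of n points (an assumed model of a slower data rate)."""
    return [sum(samples[i:i + n]) / n for i in range(0, len(samples) - n + 1, n)]


random.seed(0)  # fixed seed so the run is repeatable
fast_hz, slow_hz = 15, 1

# Simulated flat baseline: 6000 s of white noise sampled at 15 Hz (assumption).
baseline_15hz = [random.gauss(0.0, 1.0) for _ in range(fast_hz * 6000)]
baseline_1hz = bunch(baseline_15hz, fast_hz // slow_hz)

expected = expected_noise_reduction(fast_hz, slow_hz)  # sqrt(15), about 3.87
measured = statistics.pstdev(baseline_15hz) / statistics.pstdev(baseline_1hz)

print(f"expected reduction: {expected:.2f}-fold")
print(f"measured reduction: {measured:.2f}-fold")
```

With purely random noise the measured ratio should land close to the theoretical value of about 3.9. Noise that is correlated from point to point, such as drift or pump pulsation, would not be reduced to the same extent.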

As with the detector time constant (HPLC Solutions #112), the data rate can be thought of as averaging the signal over time. Thus, if the data rate is too slow, the noise may be reduced, but the signal quality may suffer, too. For example, the peaks may become shorter and broader, so that both sensitivity and resolution can be degraded under some circumstances. The rule of thumb is that 10–20 data points should be gathered across a peak to get a good representation of the peak. In the present case, the 1 Hz data rate gives 10 points across a 10 s peak, so it would be suitable for peaks 10 s wide or more. This would be adequate for well-retained (k > 2) peaks generated by a 150 × 4.6 mm, 5 µm particle column operated at 1.5 mL/min, but earlier-eluted peaks or peaks from shorter, narrower, and/or smaller-particle columns could be compromised unless a higher data rate is used.
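The 10–20 point rule of thumb is simple arithmetic, sketched below. The function names are hypothetical, and the peak width is assumed to be the baseline width in seconds.

```python
def points_across_peak(peak_width_s: float, data_rate_hz: float) -> float:
    """Number of data points collected across a peak of the given width."""
    return peak_width_s * data_rate_hz


def min_data_rate_hz(peak_width_s: float, min_points: int = 10) -> float:
    """Slowest data rate that still gives min_points across the peak."""
    return min_points / peak_width_s


def min_peak_width_s(data_rate_hz: float, min_points: int = 10) -> float:
    """Narrowest peak that still gets min_points at the given data rate."""
    return min_points / data_rate_hz


# The case from the text: at 1 Hz, peaks should be at least 10 s wide.
print(min_peak_width_s(1.0))        # 10.0

# A narrower, 2 s wide peak (illustrative value) needs 5 to 10 Hz
# to collect 10 to 20 points across it.
print(min_data_rate_hz(2.0, 10))    # 5.0
print(min_data_rate_hz(2.0, 20))    # 10.0
```

Working from the narrowest peak of interest in the chromatogram, rather than a typical one, gives the most conservative data rate.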

Today’s data systems do a good job of figuring out what settings are best for the integration of a chromatogram. This includes determining the data rate that offers the best compromise between a quiet baseline and undegraded peak quality. If you find that the results are not satisfactory, you can override the default data system settings and adjust the sampling rate to fit your needs. Be particularly cautious if you are moving from a more traditional 3 or 5 µm particle, 4.6 mm i.d. column to a ≤3 µm particle column in a 2.1 mm i.d. configuration. Reductions in column length, diameter, and particle size will each reduce the peak volume, making data collection settings more demanding.

This blog article series is produced in collaboration with John Dolan, best known as one of the world’s foremost HPLC troubleshooting authorities. He is also known for his research with Lloyd Snyder, which resulted in more than 100 technical publications and three books. If you have any questions about this article, send them to TechTips@sepscience.com.